AI Race to 100 Percent on the Bar Exam

Posted: Sept. 23, 2025

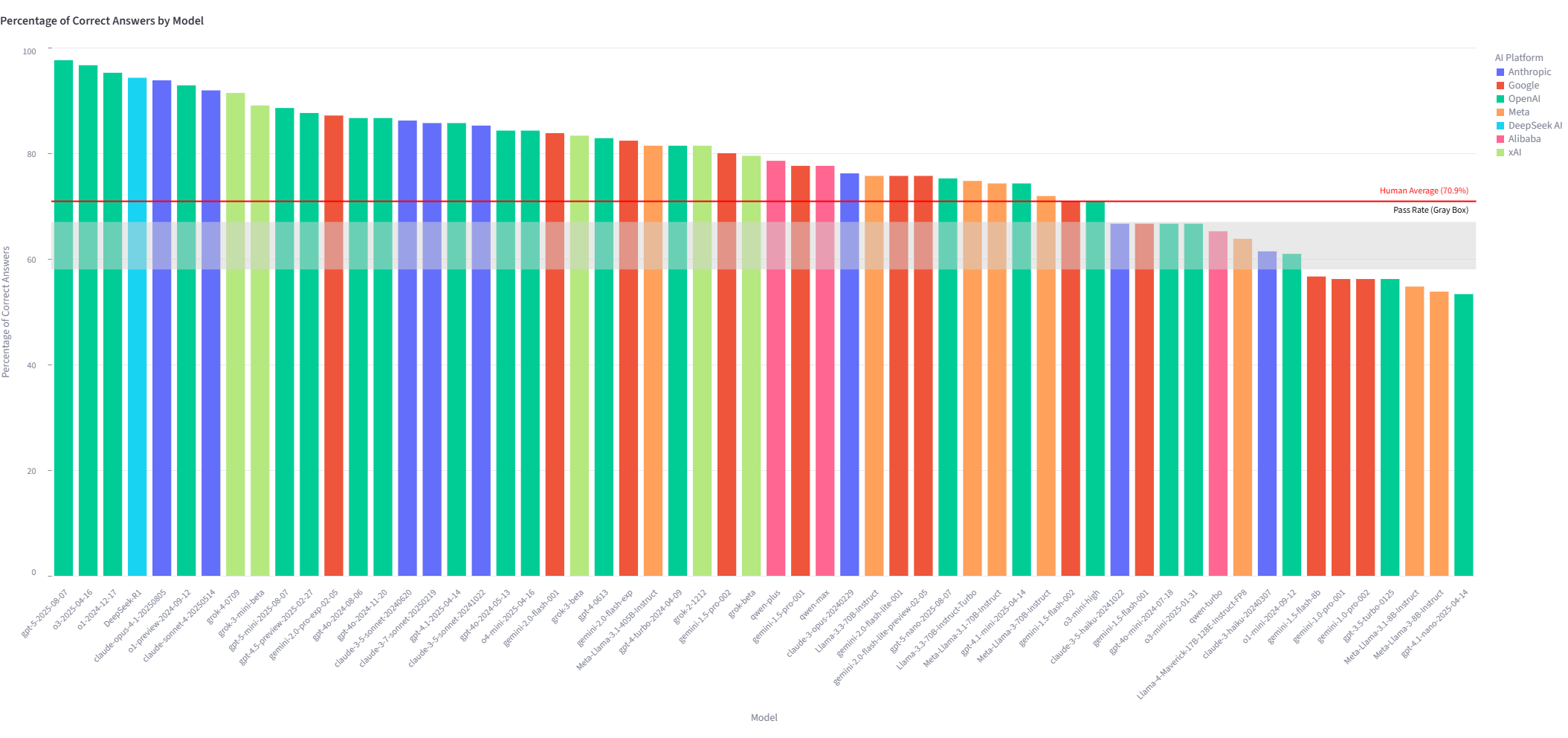

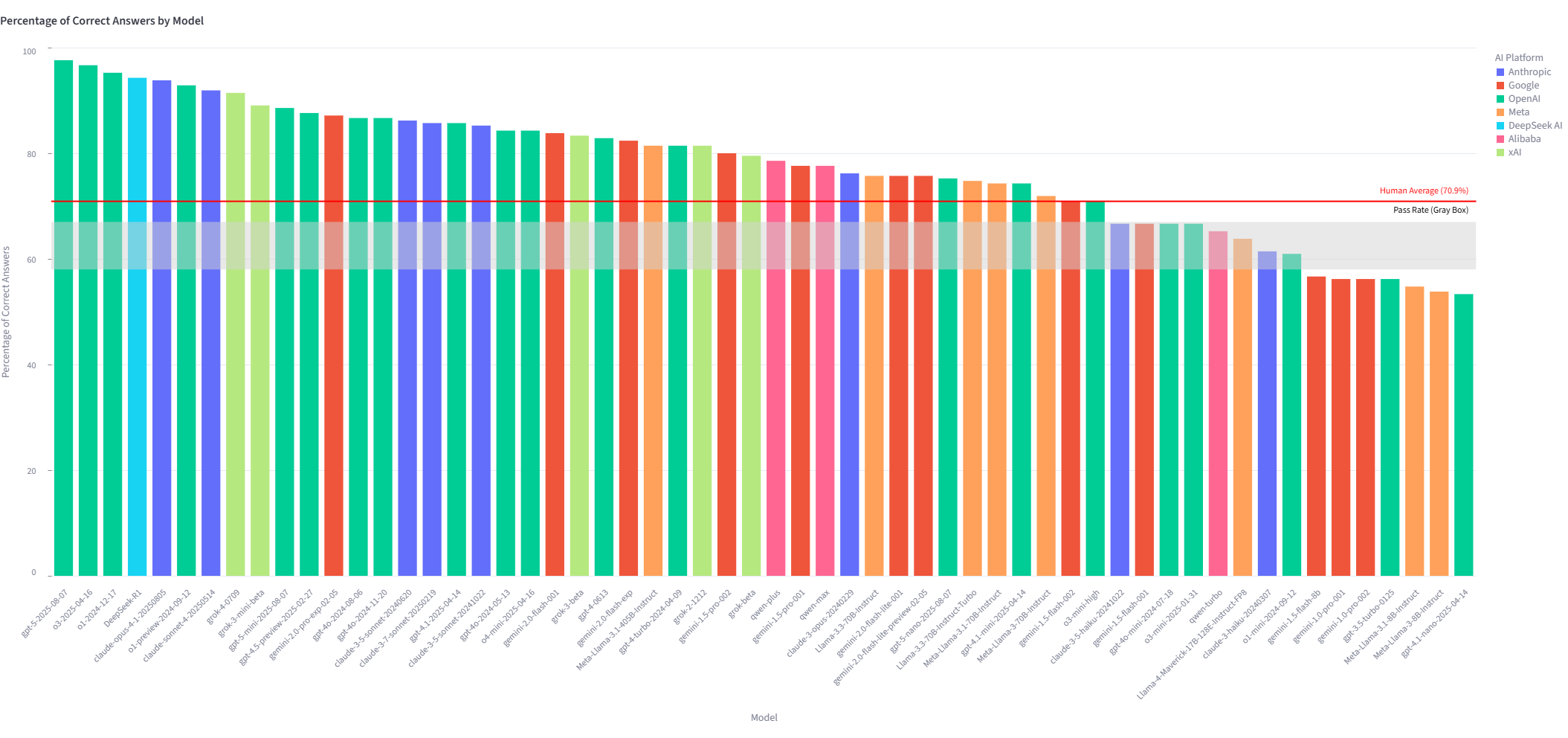

What happens when you sit today’s most advanced AI models down with a stack of bar exam questions? That’s exactly what we wanted to find out. Our team tested 59 different large language models (LLMs) from companies like OpenAI, Google, Anthropic, Meta, and more on practice Multistate Bar Exam (MBE) questions. For the non-lawyers, the MBE section of the bar exam is 200 multiple-choice questions that equally cover Civil Procedure, Contracts, Evidence, Torts, Constitutional Law, Criminal Law and Procedure, and Real Property. The multiple-choice format makes it much easier to quickly evaluate whether an AI is answering correctly. You can explore the data at the data dashboard located at https://ai-mbe-study.streamlit.app/.
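To show why multiple choice is so easy to grade, here is a minimal sketch of automated scoring in Python. It is not the study's actual code. The function names `extract_choice` and `accuracy` are hypothetical, and it assumes each model was prompted to lead its reply with a single letter from A to D.

```python
import re


def extract_choice(response):
    """Return the leading A-D letter of a model reply, or None if there isn't one.

    Assumption: the reply starts with the chosen letter, optionally in
    parentheses, e.g. "B", "(C) because...", or "a.".
    """
    cleaned = response.strip().upper()
    # The lookahead rejects ordinary words that merely start with A-D (e.g. "ANSWER").
    match = re.match(r"\(?([A-D])\)?(?![A-Z])", cleaned)
    return match.group(1) if match else None


def accuracy(responses, answer_key):
    """Fraction of replies whose extracted letter matches the answer key."""
    correct = sum(
        extract_choice(reply) == key for reply, key in zip(responses, answer_key)
    )
    return correct / len(answer_key)
```

A reply that does not lead with a letter simply counts as wrong here. A real grading pipeline would likely want to flag those replies for manual review instead.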

The results? Pretty eye-opening.

- AI is raising the bar. More than two-thirds of the models scored higher than the average human test-taker. In fact, OpenAI’s newest model, GPT-5, got a jaw-dropping 97.6% right. Four companies have models that score over 90%, so this level of performance isn't limited to a single company.

- No legal crutches. These scores came from the base models, with no special legal training, fine-tuning, or add-ons. In other words, the raw, general-purpose versions of these models are already outperforming most humans.

- Not all models are equal. Some absolutely crushed it in Constitutional Law, but stumbled on Torts. One torts question, in fact, fooled almost every model. You might pick one model over another depending on what area of law you are in.

- Cost doesn’t always match quality. Some of the priciest models weren’t the most accurate, while an open-source option, DeepSeek-R1, nearly topped the charts for a fraction of the cost.

- Speed matters too. Most models answered in under a second, but a few took minutes, even if their accuracy was impressive.
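The subject-by-subject comparison mentioned above comes down to grouping graded answers by subject. Here is a minimal Python sketch of that breakdown. The function name `accuracy_by_subject` and the `(subject, is_correct)` record format are assumptions for illustration, not the study's actual schema.

```python
from collections import defaultdict


def accuracy_by_subject(results):
    """Map each subject to the fraction of its questions answered correctly.

    `results` is an iterable of (subject, is_correct) pairs, one per question.
    """
    totals = defaultdict(lambda: [0, 0])  # subject -> [correct, attempted]
    for subject, is_correct in results:
        totals[subject][0] += int(is_correct)
        totals[subject][1] += 1
    return {subject: right / seen for subject, (right, seen) in totals.items()}


# Made-up sample records, not data from the study.
sample = [("Torts", True), ("Torts", False), ("Evidence", True)]
print(accuracy_by_subject(sample))
```

Running the same function once per model gives a table of per-subject scores, which is the kind of view you would want when choosing a model for a particular practice area.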

Some legal AI startups boast sky-high valuations, but they may have a hard time proving their edge when free or cheap base models are already close to their level of accuracy. The market may soon ask: what are we really paying for?

AI has come a long way since ChatGPT’s launch in 2022, and it’s only getting better. But like any tool in law, it needs careful use, ongoing scrutiny, and a healthy dose of human judgment.

If you’re curious to dive deeper, you can check out the full study and methodology here. (The paper was finalized in May 2025, so it only includes the models up to that point.)

The code and data are all open source. Check out all of the data at https://ai-mbe-study.streamlit.app/.